You may recall my sci-fi romance, A Heaven For Toasters, taking place some 100 years in the future. Leo, the android protagonist, exhibits some distinctly human characteristics—including the ability to feel human emotions. But could Leo become a writer or poet?

The Economist recently shared an article cheekily called Don’t Fear the Writernator – a reference to literature’s terminator. What prompted this was the news that researchers have come up with a more powerful version of automated writing.

So, how afraid should we be? Is Leo about to compose a sonnet to woo Mika?

Automated Writing

Automated writing, in case you’re unfamiliar with the term, is best exemplified by Gmail’s Smart Reply feature. Gmail offers brief answers to routine emails. So, if someone asks you “shall we meet up for lunch?” Gmail suggests a variety of appropriate responses, for example, “Sure!”

More strikingly, Smart Compose kicks in as you write, suggesting endings to your sentences.

The system makes some sophisticated statistical guesses about which words follow which. Imagine beginning an email with “Happy…” Having looked at millions of other emails, Gmail can plausibly guess that the next word will be “birthday”.
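To make the idea of "which words follow which" concrete, here is a toy sketch in Python. It is not how Gmail actually works (real systems use far larger models and datasets); it simply counts word pairs in a tiny, made-up set of emails and suggests the most frequent follower. The function names `train_bigrams` and `suggest_next` and the sample emails are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for each word, how often each other word follows it."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def suggest_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny made-up corpus standing in for "millions of other emails".
emails = [
    "happy birthday to you",
    "happy birthday dear friend",
    "happy new year",
    "wishing you a happy birthday",
]

model = train_bigrams(emails)
print(suggest_next(model, "Happy"))
```

In this sample, "birthday" follows "happy" three times and "new" only once, so the sketch suggests "birthday". A word it has never seen gets no suggestion at all, which hints at why such systems need enormous amounts of text.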

Automated Writing, New Yorker-style

The New Yorker’s John Seabrook recently described a more powerful version of this technology, called GPT-2.

GPT-2 was trained on roughly 40 gigabytes of text from the web. For Seabrook’s article, a version of it was then refined with back issues of The New Yorker. This lets it ably mimic the magazine’s style.

How Scared Should You Be?

From testing GPT-2, Seabrook realized that what eludes computers is creativity. By virtue of having been trained on past compositions, they can only be derivative. Furthermore, they cannot conceive a topic or goal on their own, much less plan how to get there with logic and style.

At various points in the online version of his article, readers can see how GPT-2 would have carried on writing Seabrook’s piece for him. The prose gives the impression of being human. But on closer inspection, it is empty—even incoherent.

To truly write, you must first have something to say. Computers do not. They await instructions. Given input, they provide output.

Such systems can be seeded with a topic, or the first few paragraphs, and be told to “write.” While the result may be grammatical English, this should not be confused with the purposeful kind.

As the Economist points out, to compose meaningful essays, the likes of GPT-2 will first have to be integrated with databases of real-world knowledge. This is possible at the moment only on a very limited scale. Ask Apple’s Siri or Amazon’s Alexa for a single fact (say, what year “Top Gun” came out) and you will get the answer. But ask them to present arguments in a debatable case (“Do gun laws reduce gun crime?”) and they will flounder.

Writernator’s Limitations

An advance in integrating knowledge would then have to be married to another breakthrough: teaching text-generation systems to go beyond sentences to structures.

Seabrook found that the longer the text he solicited from GPT-2, the more obvious it was that the work it produced was gibberish.

Each sentence was fine on its own. Remarkably, three or four back to back could stay on topic, apparently cohering. But machines are eons away from being able to recreate rhetorical and argumentative flow across paragraphs and pages.

Not only can today’s journalists expect to finish their careers without competition from the Writernator—today’s parents can tell their children that they still need to learn to write, too.

Fake News and Writernator

A more plausible worry is that such systems will be able to flood social media and online comment sections with semi-coherent but angry ramblings that are designed to divide and enrage.

This is already happening, as a recent study on fake news from the University of California, Santa Barbara shows. Artificial intelligence allows bots to simulate Internet users’ behavior (e.g., posting patterns) which helps in the propagation of fake news.

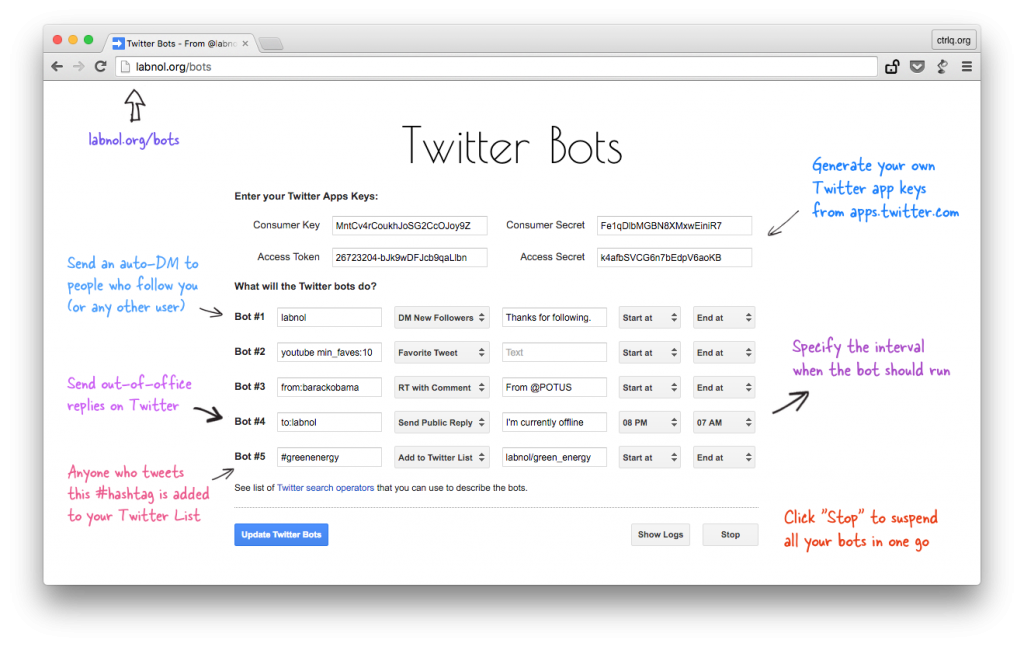

For instance, on Twitter, bots are capable of a number of social interactions that make them appear to be regular people. They respond to postings or questions from others based on scripts that they were programmed to use. They look for influential Twitter users (Twitter users who have lots of followers) and contact them by sending them questions in order to be noticed and generate trust.

They also generate debate by posting messages about trending topics. They can do this by hunting for, and repeating, information about the topic that they find on other websites.

Source: Amit Agarwal’s (2017) fairly accessible guide, “How to Write a Twitter Bot in 5 Minutes.”

Bots’ tactics work because the average social media user tends to believe what they see or what’s shared by others without questioning. So bots take advantage of this by broadcasting high volumes of fake news and making it look credible.

But bots apparently aren’t that good at deciding which original comments by other users to retweet. They’re not that smart.

People are smart. But people are emotional. Real people also play a major role in the spread of fake news.

Fighting fake news will, then, get harder with the advent of increasingly sophisticated software. Most of the time, however, the spread of fake news is a consequence of real people, usually acting innocently. All of us, as users, are the biggest part of the problem.

Perhaps a flood of furious auto-babble will force future readers to distinguish between the illusion of coherence and the genuine article. If so, the Writernator, much like the Terminator, would even come to do the world some good.

I wrote about automated writing a while back. The sad thing is that there are already computer-generated, formulaic novels and poetry out there… soulless, but saleable.

Soulless but saleable sounds like a dreary combination for humanity!

I can think of few things worse…

Yikes! I didn’t know.

I’d say it is quite alarming, but you can feel the difference in the writing.

Can you please send us a link to that post, Sue?

There were a couple, Nicholas, so I’ll send both links. https://scvincent.com/2017/03/18/are-computers-the-death-knell-for-writers-ask-google/

Super, thanks! For some reason (probably because of the links) this ended up in the Spam, so I apologize for the delay in responding!

No worries, Nicholas… the vagaries of WP 😉

This is a great topic. I’ve worked with a number of clients who specialize in natural language processing R&D, and I’m convinced that replacing most humans with bots is our inevitable future. There’s simply no way we can compete with limitless content creation as a fixed one-time cost, once it becomes a fully viable alternative to current human productivity models. The good news is, we definitely have some time before it happens at scale! The time to write is now! That’s what I’m doing.

I’m not that pessimistic, personally. It’s one thing to create content; it’s quite another to create meaningful content. The latter will always be prized.

I would never suggest that meaningful human-generated content is inferior or undesirable! Unfortunately, there are plenty of people out there who will happily put us out of work, in exchange for short-term ROI. Sometimes truth sounds pessimistic, but I believe promoting awareness will inspire more people to push back against the scourge of “cheap AI lit.”

Thankfully, we’re still decades away from any meaningful prose coming out of AI 🙂

Reblogged this on K Morris – Poet and commented:

I agree with the points made in this post. So far as poetry is concerned, a poet (of the human variety) is possessed of emotions, which express themselves through his/her poetry. Computers (however smart) are not capable of feeling emotions, so it’s difficult to imagine how a machine could produce poems of real worth. Kevin

Yes, Kevin. I agree. And poetry is about emotions. So we who write poetry are safe for the foreseeable future.

I honestly can’t imagine a bot that creates poetry without plenty of human assistance… not any good poetry, anyway!

Yeah. Probably don’t have to worry unless one of two things happens. A.I.s that perfectly replicate the minds of famous authors would mean those series and ideas continue getting built forever. Hard to compete with an army of immortal A-list authors in this day and age. The other scenario would be the majority of mankind being happy with only the most basic story that is always the same. As long as the thing is flashy and exciting, they won’t care if the story is actually good. I’m more nervous about that second option since you can see it a bit in certain mediums.

I’ve often wondered about that myself, with the endless remakes and eye-candy effects. Even a success like The Mandalorian felt rather light in content…

Just finished that. I realized that the biggest draw to it was a pseudo-reboot of a popular character. The show wouldn’t be nearly as popular without baby Yoda.

Good point! And one I completely agree with.

I’m comforted that AI fiction tends to break down the longer it gets, but I’m alarmed at AI’s capability to generate short pieces of fake news.

Same here. Miscreants are always one step ahead, it seems…

I’m quite sure I couldn’t enjoy a story written by a computer. Ugh! Horrible idea. Let’s hope it never happens.

Indeed!

As Sue said, this is already happening with computer-generated novels for sale in some genres. People are buying them without knowing that, I’m sure. But there will always be a market for the passionate and the bizarre. I doubt a computer could compete with Terry Pratchett, Douglas Adams, or Franz Kafka. 🙂

Best wishes, Pete.

I haven’t heard of any computer-generated novels, Pete. I’d be very surprised if that happened without people knowing it, as it would be a major hype point: “buy the first novel written by a computer!” I know I’d buy that 🙂

It’s all pretty experimental at the moment. But who knows what’s going to happen down the line?

I’ve been described as a pessimist in the past. While I acknowledge the good that comes from technological advancement, I am rather skeptical of our future in the land of AI. However, when it comes to robowriting, I am somewhat calm. It’s just additional motivation to sharpen my craft.

Like most technological innovations, I see robowriting (love that term) as a tool–more of a virtual assistant to our writing than a threat to it.

Interesting stuff. I suppose from now on, where unknown new writers’ output is concerned, the watchword should be “caveat emptor”! 😉 Cheers, Jon.

I’m sure that readers will know in seconds whether a novel is computer-generated 🙂

Reblogged this on Wilfred Books and commented:

Is computer-generated writing convincing yet? Food for thought here.

I’m not going into panic mode yet. Everyone hates auto-correct, and spell check is still far from error-free. Great literature, like poetry, is much more than grammatically correct sentences. It’s about real humans expressing real emotions and ideas we can relate to in our own lives. Humans have always gone through dark times where it seems people embrace ignorance, but we always bounce back. There are still plenty of people in the world who want more than quickly produced, mindless entertainment. The biggest danger I see from this is the bots. Too many on social media are eager to play follow the leader, with no thought of who is giving them information. Great post. It sure gives us something to think about.

I agree with everything you’ve said! I can easily see AI used as virtual assistants but not as writers. With the exception of bots, and that’s only because it’s humans controlling them.

This fake news (or lies as it used to be called) is worrying, but I’m pleased we won’t be put out of business just yet.

I just ran a spell and grammar check on my current wip and some of the suggestions were laughable at best, and incomprehensible at worst. I suppose AI is a number of steps above this, but it does give an idea of the problems.

Like you, I think the problem’s exaggerated, at least where writing is concerned. However, manufacturing robots will put a lot of people out of work and that will cause a huge disruption. Unfortunately, we’re woefully unprepared for this, as politicians seem to ignore the working man’s problems (unless they exploit their worries to get elected, of course).

What a scary robot writer you have chosen. Lol. But indeed, I think there will never be writing equal to that of human writers. Rather, I think we will get more robot writers in our state offices. This could enrich our lives. 😉 Thank you for sharing this useful post. Michael.

Lol–yes, it’s quite the image 😀

I agree with you. AI will assist our writing but I can’t see it competing with us. At least not for a good few decades!

So true, Nicholas! At least AI will only compose new writings out of a database of written books. I think we will have to look out for plagiarism much more. Best wishes, Michael

Reblogged this on Author Don Massenzio and commented:

Check out this intriguing post from Nicholas Rossis’ blog that asks the question: Will Computer-generated Writing Replace the Human Kind?

An interesting insight. Thank you for drawing this to our attention Nicholas. Definitely something to keep an eye on.

I’m pretty sure it will be hard to miss in the coming years. More tomorrow 🙂
